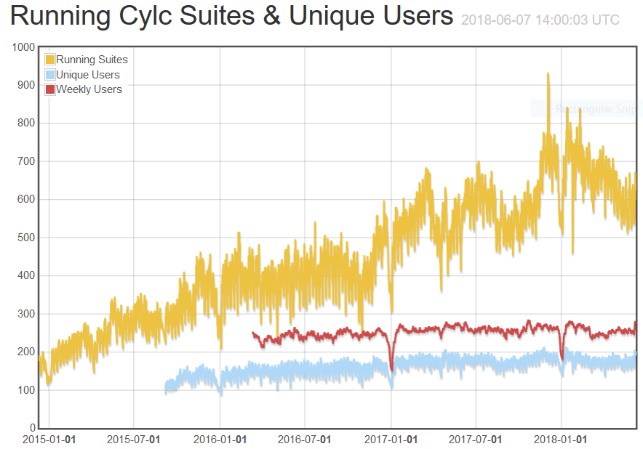

Features, Benefits, & Adoption Worldwide

Hilary Oliver - NIWA - 15 Nov 2018, Dallas, Texas

Hilary Oliver

NIWA (National Institute of Water and Atmospheric Research)

New Zealand

Features

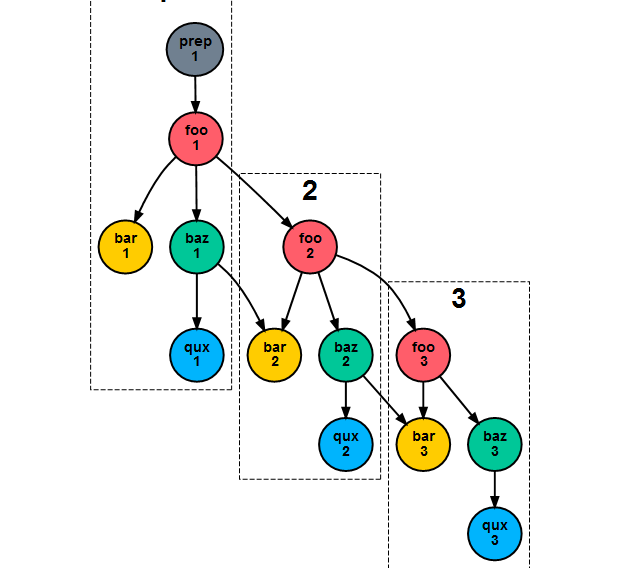

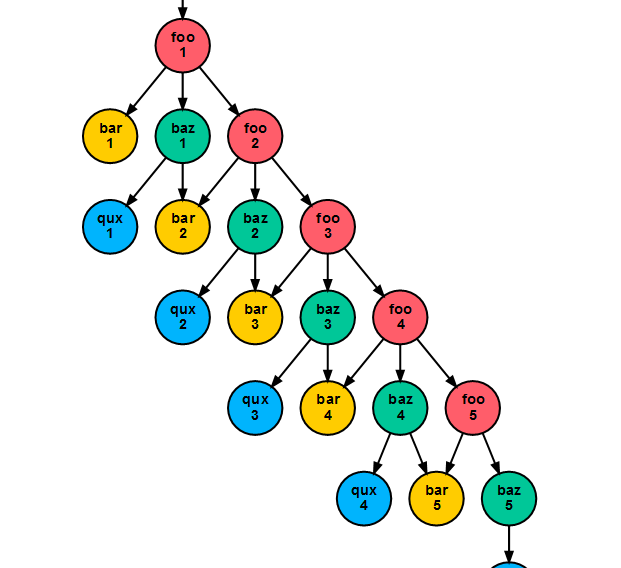

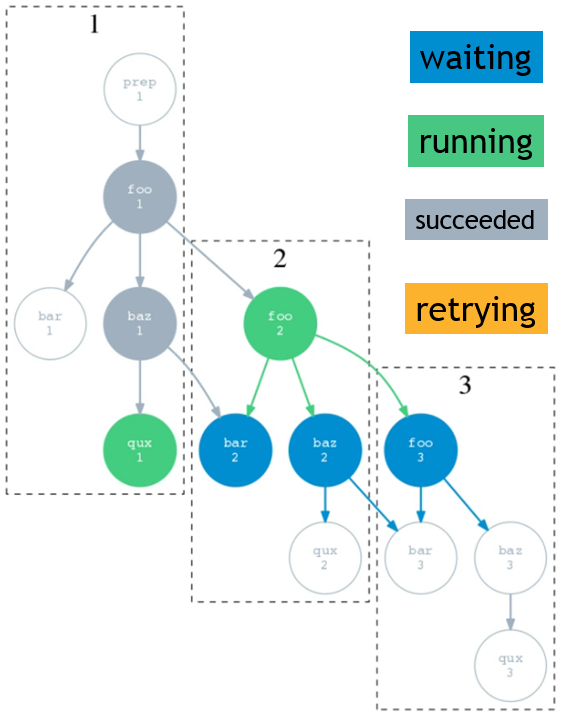

- repeat cycles...

- ...there's inter-cycle dependence between some tasks...

- ...which is technically no different to intra-cycle dependence...

- ...no need to label cycle points (boxes) as if they have global relevance...

- ... so you can see this is actually an infinite single workflow that happens to be composed of repeating tasks
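
The claim that inter-cycle dependence is no different from intra-cycle dependence can be illustrated by expanding a small cycling graph over a few integer cycle points. This is a hypothetical sketch, not Cylc's code: the `expand` function and the tasks `foo` and `bar` are assumptions, and `foo[-P1] => foo` is represented as an offset of `-1`.

```python
def expand(points, intra, inter):
    """Expand a cycling graph into one set of (upstream, downstream) edges.

    Each node is a (task name, cycle point) pair.
    intra: same-cycle edges, e.g. [("foo", "bar")] for foo => bar
    inter: (upstream, offset, downstream) edges across cycles,
           e.g. [("foo", -1, "foo")] for foo[-P1] => foo
    """
    edges = set()
    for point in points:
        for up, down in intra:
            edges.add(((up, point), (down, point)))
        for up, offset, down in inter:
            upstream_point = point + offset
            # Dependencies on points before the initial cycle point are dropped.
            if upstream_point in points:
                edges.add(((up, upstream_point), (down, point)))
    return edges


# Three cycles of "foo => bar" plus "foo[-P1] => foo".
for (up, up_pt), (down, down_pt) in sorted(
    expand(range(1, 4), [("foo", "bar")], [("foo", -1, "foo")])
):
    print(f"{up}.{up_pt} => {down}.{down_pt}")
```

Nothing in the resulting edge set marks a cycle boundary: it is one graph that happens to be built from repeating tasks.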

What's cycling needed for?

- clock-limited real-time forecasting

- splitting a long simulation into short chunks

- iterative workflows (e.g. optimize model params)

- processing datasets as concurrently as possible

- classic pipelines!

- (and ... other kinds of repetitive processing)

- dynamic cycling is not strictly needed for small, short workflows.

- historically (in NWP), achieved by running whole cycles sequentially.

What Cylc Is Like

- complex cycling model(*) workflows in HPC

- text config file (with programmatic templating)

- treat workflow definitions like source code

- economy of workflow definition: inheritance, cycling graph notation, templating

- light-weight, flexible, and general

- application agnostic: any script or program

- move data around however you like

- ANY date-time or integer cycling sequences

- ETC...

Not currently well suited to "data-intensive" workflows

no magic sauce to obscure "what the scientist does"

A workflow is primarily a configuration of the workflow engine, and a config file is easier for most users and most use cases than programming to a Python API. However, ...!

ETC.: event handling, checkpointing, extreme restart, ...

A powerful "unified" CLI

$ cylc --help

e.g. to re-trigger all failed tasks with name get_* and cycle point 2020* in suite expt1 (leaving others alone):

$ cylc trigger expt1 '2020*/get_*:failed'
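
The filtering in that command (point glob, name glob, and status) can be sketched in a few lines. This is a hypothetical illustration of the matching idea, not Cylc's own parser: the in-memory `POOL`, the `select` function, and the compact cycle points (which avoid colons so the `:STATUS` suffix can be split off simply) are all assumptions.

```python
from fnmatch import fnmatchcase

# Hypothetical task pool: (cycle point, task name, status).
POOL = [
    ("20200101T00", "get_obs", "failed"),
    ("20200101T00", "get_model", "succeeded"),
    ("20200101T00", "run_model", "failed"),
    ("20190101T00", "get_obs", "failed"),
]


def select(pattern, pool):
    """Return pool entries matching POINT_GLOB/NAME_GLOB[:STATUS]."""
    ident, _, status = pattern.partition(":")
    point_glob, _, name_glob = ident.partition("/")
    return [
        (point, name, state)
        for point, name, state in pool
        if fnmatchcase(point, point_glob)
        and fnmatchcase(name, name_glob)
        and (not status or state == status)
    ]


print(select("2020*/get_*:failed", POOL))
```

Quoting the pattern on the command line matters for the same reason the globs work here: the shell must pass `2020*/get_*:failed` through untouched rather than expanding it against local files.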

note dynamic filtering vs static SMS GUI

# Hello World! Plus

    [scheduling]
        [[dependencies]]
            graph = "hello => farewell & goodbye"
    [runtime]
        [[hello]]
            script = echo "Hello World!"
            [[[environment]]]
                # ...
            [[[remote]]]
                host = hpc1.niwa.co.nz
            [[[job]]]
                batch system = PBS
                # ...
        # ...
    # ...

- [runtime] is a multiple inheritance hierarchy for efficient sharing of all common settings
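
How a task picks up settings from several parent families can be sketched by linearizing the hierarchy and merging settings from the most general ancestor to the most specific. This sketch borrows Python's own C3 method resolution order to do the linearization; the namespaces `HPC`, `MODEL`, and `sim` and their settings are hypothetical, and whether Cylc resolves conflicts in exactly this order is an assumption here.

```python
# Hypothetical runtime namespaces: settings defined directly on each one,
# and the parents each one inherits from (like "inherit = HPC, MODEL").
SETTINGS = {
    "root": {"script": "true", "batch system": "background"},
    "HPC": {"host": "hpc1.niwa.co.nz", "batch system": "PBS"},
    "MODEL": {"script": "run-model"},
    "sim": {},
}
PARENTS = {"root": [], "HPC": ["root"], "MODEL": ["root"], "sim": ["HPC", "MODEL"]}


def linearize(name):
    """Order a namespace and its ancestors using Python's C3 MRO."""
    classes = {}

    def build(ns):
        if ns not in classes:
            bases = tuple(build(p) for p in PARENTS[ns]) or (object,)
            classes[ns] = type(ns, bases, {})
        return classes[ns]

    return [cls.__name__ for cls in build(name).__mro__ if cls is not object]


def resolve(name):
    """Merge settings from the most general ancestor to the most specific."""
    merged = {}
    for ns in reversed(linearize(name)):
        merged.update(SETTINGS[ns])
    return merged


print(linearize("sim"))
print(resolve("sim"))
```

Each setting is written once, on the family that owns it, and every member inherits it; that is the "share all common settings" benefit.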

    #!Jinja2
    {% set SAY_BYE = false %}
    [scheduling]
        [[dependencies]]
            graph = """hello
    {% if SAY_BYE %}
                => goodbye & farewell
    {% endif %}
            """
    [runtime]
        # ...

    #!Jinja2
    {% set SAY_BYE = true %}
    [scheduling]
        [[dependencies]]
            graph = """hello
    {% if SAY_BYE %}
                => goodbye & farewell
    {% endif %}
            """
    [runtime]
        # ...

    [[dependencies]]
        graph = "pre => sim => post => done"

    [[dependencies]]
        graph = "pre => sim<M> => post<M> => done"
        # with M = 1..5

    [[dependencies]]
        graph = "prep => init => sim => post => close => done"

    [[dependencies]]
        graph = "prep => init<R> => sim<R,M> => post<R,M> => close<R> => done"
        # with M = a,b,c; and R = 1..3
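
What the parameterized graphs expand to can be sketched by generating one task per parameter combination and connecting instances whose shared parameters agree. This is a hypothetical illustration: the suffix convention (`sim_m3`, `sim_r2_mb`) and the functions `instances` and `expand` are assumptions, and Cylc's own default task-name templates may differ.

```python
import itertools
import re

NODE = re.compile(r"(\w+)(?:<([\w,\s]+)>)?")


def instances(node, params):
    """Expand one graph node such as "sim<R,M>" into (task name, binding) pairs."""
    name, plist = NODE.fullmatch(node.strip()).groups()
    keys = [k.strip() for k in plist.split(",")] if plist else []
    result = []
    for combo in itertools.product(*(params[k] for k in keys)):
        binding = dict(zip(keys, combo))
        # Hypothetical naming convention: sim<R,M> with R=1, M=a -> sim_r1_ma
        suffix = "".join(f"_{k.lower()}{v}" for k, v in binding.items())
        result.append((name + suffix, binding))
    return result


def expand(graph, params):
    """Expand a linear "a => b<P> => ..." graph into task-level edges."""
    nodes = [instances(n, params) for n in graph.split("=>")]
    edges = []
    for ups, downs in zip(nodes, nodes[1:]):
        for up, up_binding in ups:
            for down, down_binding in downs:
                shared = up_binding.keys() & down_binding.keys()
                # Connect only instances whose shared parameters agree.
                if all(up_binding[k] == down_binding[k] for k in shared):
                    edges.append((up, down))
    return edges


for edge in expand("pre => sim<M> => post<M> => done", {"M": range(1, 6)}):
    print(" => ".join(edge))
```

The one-line `prep => init<R> => sim<R,M> => ...` graph expands to 33 task-level dependencies with R = 1..3 and M = a,b,c, which is the economy the slide is pointing at.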

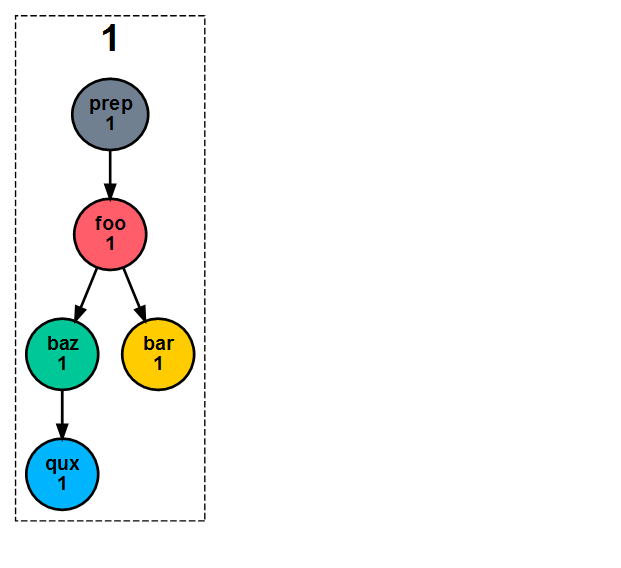

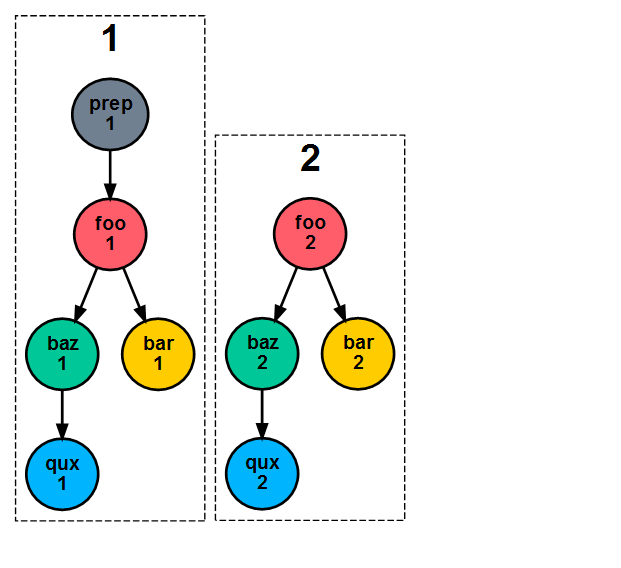

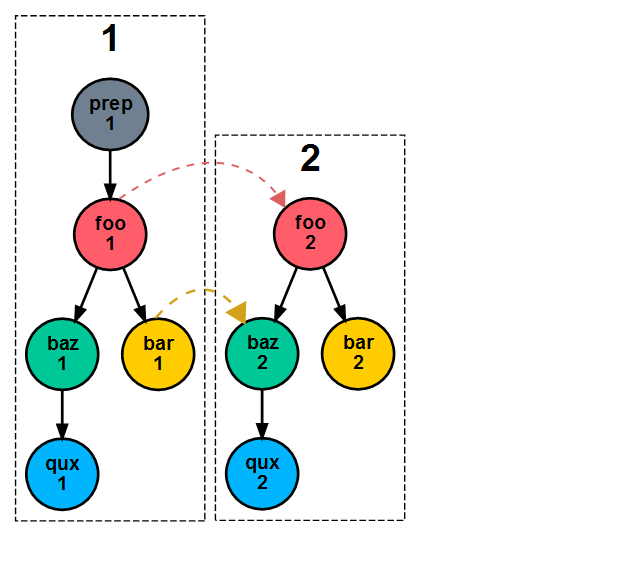

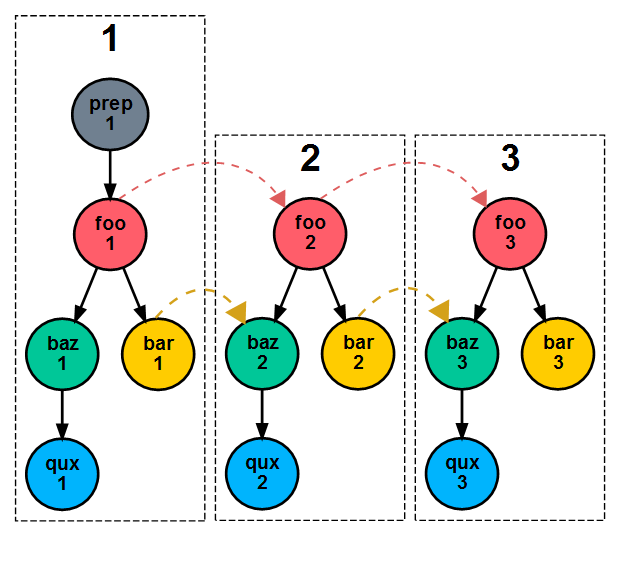

    [cylc]
        cycle point format = %Y-%m
    [scheduling]
        initial cycle point = 2010-01
        [[dependencies]]
            [[[R1]]]  # R1/^/P1M
                graph = "prep => foo"
            [[[P1M]]]  # R/^/P1M
                graph = """
                    foo[-P1M] => foo
                    foo => bar & baz => qux
                """
            [[[R2/^+P2M/P1M]]]
                graph = "baz & qux[-P2M] => boo"
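
The three recurrences can be checked by listing the cycle points each one generates. A minimal sketch, assuming plain month arithmetic as a stand-in for Cylc's ISO 8601 date-time handling and a hypothetical cutoff at 2010-06 so the unbounded `P1M` sequence can be printed; `add_months`, `recurrence`, and `fmt` are illustrative names.

```python
def add_months(point, months):
    """Add whole months to a (year, month) cycle point."""
    year, month = point
    total = year * 12 + (month - 1) + months
    return (total // 12, total % 12 + 1)


def recurrence(start, period, count=None, stop=None):
    """Yield start, start + period, ... up to `count` points or until `stop`."""
    point, n = start, 0
    while (count is None or n < count) and (stop is None or point <= stop):
        yield point
        point, n = add_months(point, period), n + 1


def fmt(point):
    """Format like "cycle point format = %Y-%m"."""
    return f"{point[0]:04d}-{point[1]:02d}"


INITIAL = (2010, 1)  # initial cycle point = 2010-01 ("^")
STOP = (2010, 6)     # hypothetical cutoff: the suite itself has no final point

sequences = {
    "R1": recurrence(INITIAL, 1, count=1, stop=STOP),
    "P1M": recurrence(INITIAL, 1, stop=STOP),
    "R2/^+P2M/P1M": recurrence(add_months(INITIAL, 2), 1, count=2, stop=STOP),
}
points = {name: [fmt(p) for p in seq] for name, seq in sequences.items()}
for name, pts in points.items():
    print(name, pts)

# qux[-P2M] at the first R2 point refers back to the initial cycle point.
print(fmt(add_months((2010, 3), -2)))
```

So `boo` runs only at 2010-03 and 2010-04, and its first `qux[-P2M]` prerequisite falls on the initial cycle point.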

other features

- optimal catch-up from delays

- robust inter-workflow triggering

- state checkpointing and magic restart

- general event handling

- external event triggers (NEW)

- suite auto-migration! (NEW)

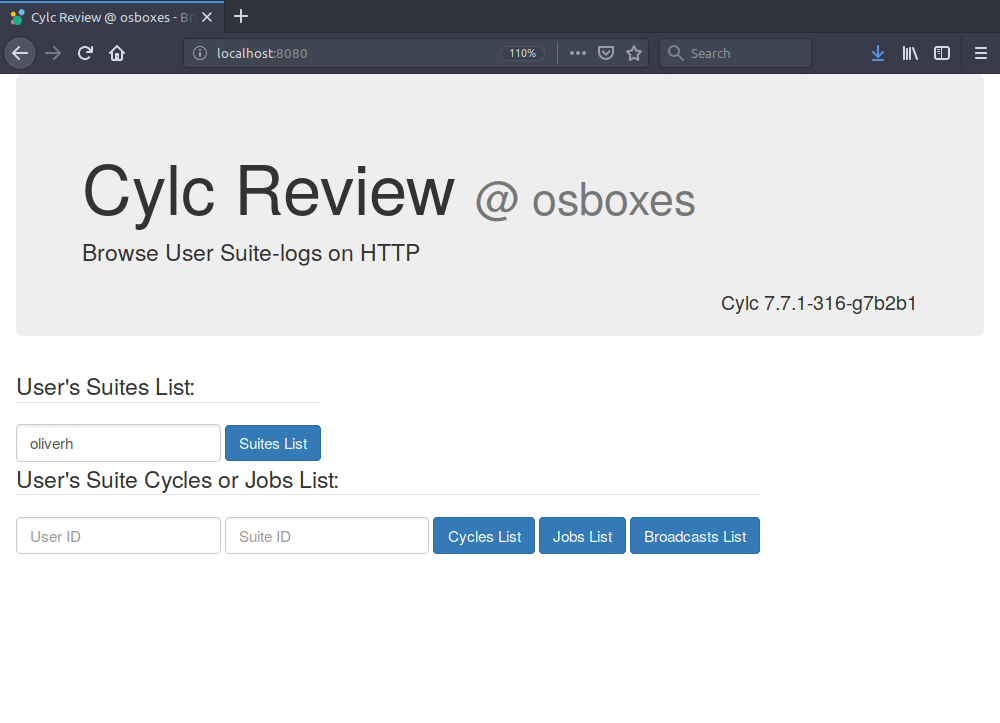

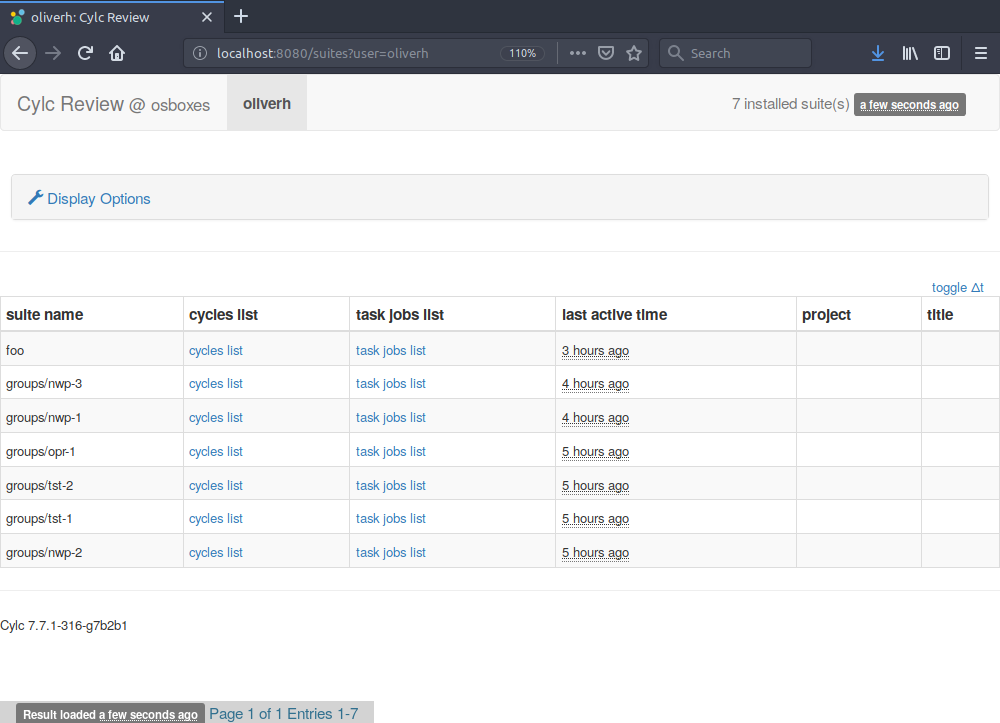

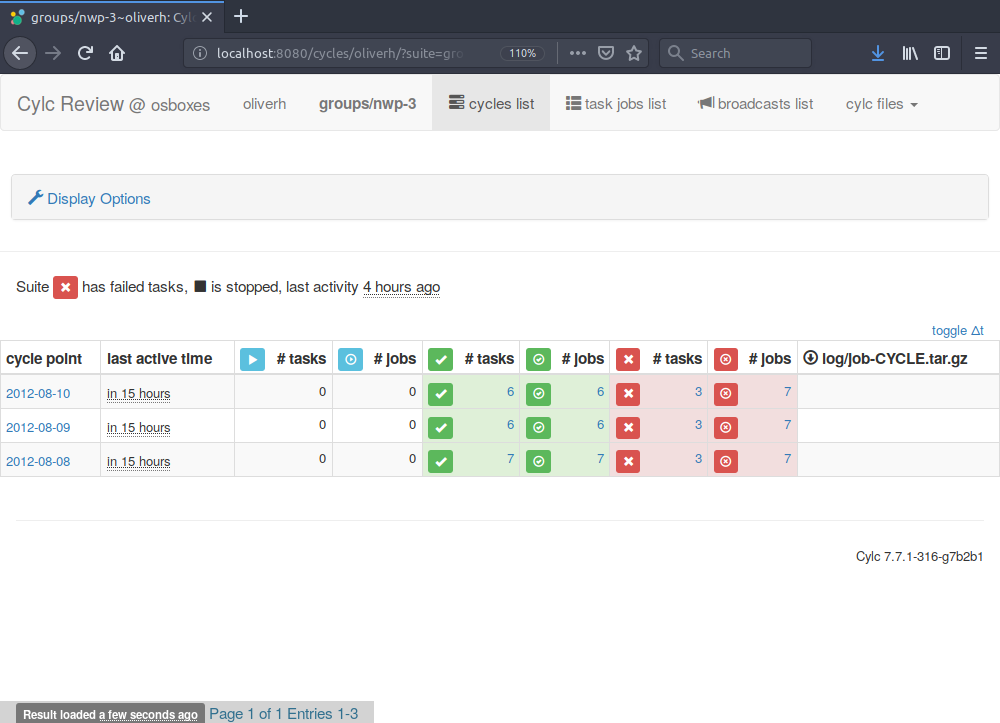

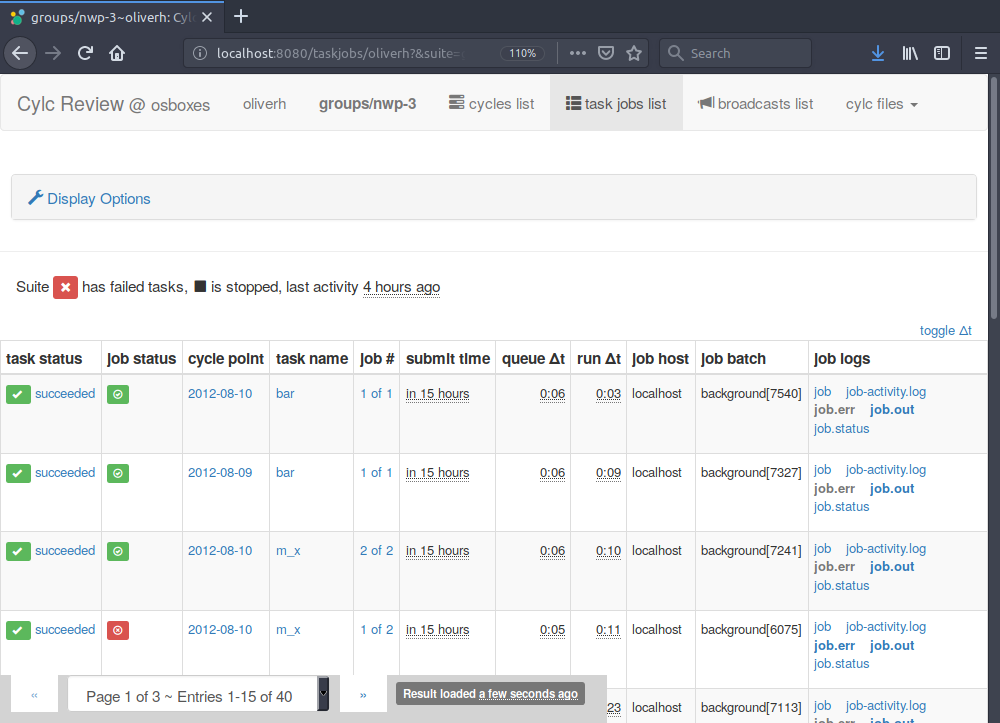

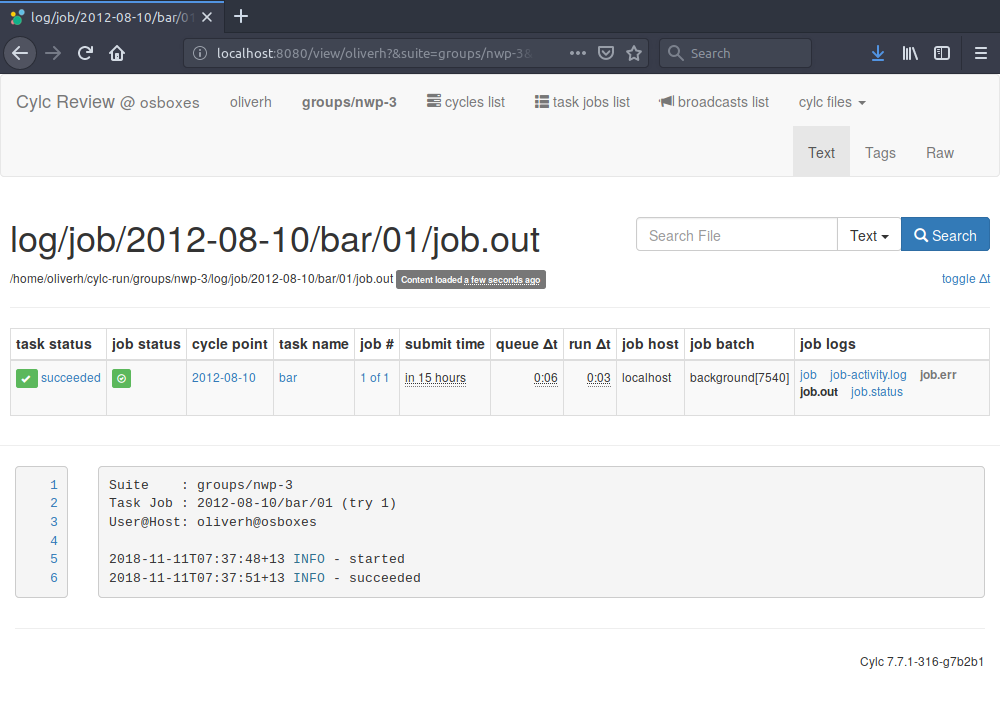

- cylc review: job log viewer (NEW)

- production tested!

- ...

event handling: includes built-in aggregated emails

robust inter-workflow triggering: via the suite database, not the suite server (important for transient distributed suites)

production tested: recovery from hall failures
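
Why triggering via the suite database is robust can be sketched with an in-memory SQLite table: the downstream suite only reads the upstream suite's database, so the upstream suite does not need to be running when the check happens. The `task_states` table layout and the functions `upstream_status_reached` and `poll` are simplified assumptions, not Cylc's actual schema or code.

```python
import sqlite3
import time


def upstream_status_reached(db, task, point, status="succeeded"):
    """Check an upstream suite's database for a task in a given state."""
    row = db.execute(
        "SELECT 1 FROM task_states WHERE name = ? AND cycle = ? AND status = ?",
        (task, point, status),
    ).fetchone()
    return row is not None


def poll(db, task, point, tries=3, interval=0.0):
    """Poll the upstream database; the upstream suite need not be running."""
    for _ in range(tries):
        if upstream_status_reached(db, task, point):
            return True
        time.sleep(interval)
    return False


# Stand-in for the upstream suite's database file (simplified, assumed schema).
upstream_db = sqlite3.connect(":memory:")
upstream_db.execute("CREATE TABLE task_states (name TEXT, cycle TEXT, status TEXT)")
upstream_db.execute("INSERT INTO task_states VALUES ('post', '2010-01', 'succeeded')")

print(poll(upstream_db, "post", "2010-01"))  # upstream task has succeeded
print(poll(upstream_db, "post", "2010-02"))  # not recorded yet, so polling gives up
```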

Benefits

(See FEATURES!)

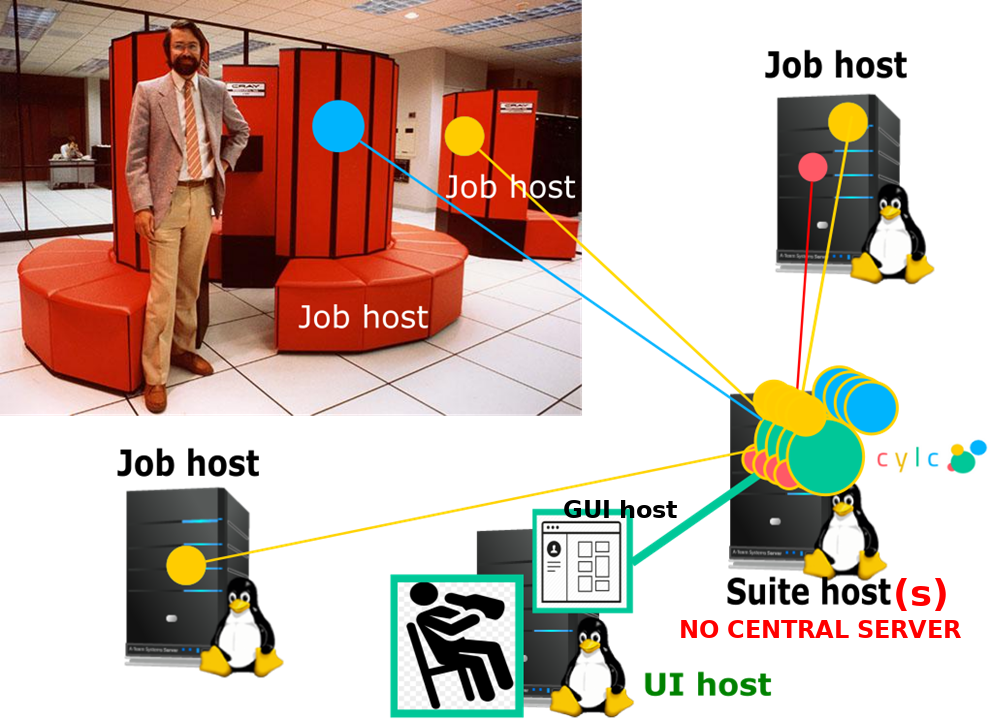

no central server (distributed workflows)

- scales sideways (pool of Cylc servers/VMs)

- easy system upgrades (one suite at a time)

- light-weight, low admin

- easy for research teams and individuals

correct handling of cycling

- seamless transition from behind-the-clock, to clock-limited operation (and vice versa)

- seamless research-production transition

- very fast catch-up from delays (depending)

- job failures don't affect upstream tasks at all

research to production: this also involves other aspects, such as configurable workflow definitions (switching parts on and off), but primarily, research runs don't need to be clock-limited.
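
The seamless transition between behind-the-clock and clock-limited running can be sketched with a simple clock-trigger rule: a task may run once wall-clock time reaches its cycle point plus an offset. A minimal sketch, assuming hypothetical 6-hourly cycles with a 1-hour offset and ignoring every other prerequisite a real task would have; `clock_ready` is an illustrative name.

```python
from datetime import datetime, timedelta


def clock_ready(point, offset, now):
    """A clock-triggered task may run once wall-clock time reaches point + offset."""
    return now >= point + offset


# Hypothetical 6-hourly cycles, each triggered 1 hour after its cycle point.
cycle_points = [datetime(2018, 11, 15, hour) for hour in (0, 6, 12, 18)]
offset = timedelta(hours=1)
now = datetime(2018, 11, 15, 12, 0)

for point in cycle_points:
    state = "ready" if clock_ready(point, offset, now) else "waiting on clock"
    print(point.isoformat(), state)
```

Cycles already behind the clock have their clock condition satisfied, so they run as fast as their other dependencies allow (catch-up); once the workflow catches up, each new cycle waits on the clock. The same definition covers both modes, and a research run can simply omit the clock triggers.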

economy of workflow definition

- cycling graph notation - infinite graphs

- multiple inheritance through task families

- share ALL common configuration

- mass triggering of task families

- Jinja2 templating

- parameterized tasks

(duplicated config is a maintenance risk)

ease of use

- download cylc(*) to your laptop, run it

- Cylc makes moderate workflows EASY

- to write

- to understand at a glance

- intuitive graph notation

- workflow graph visualization

- ...and large complex workflows possible

(*) caveat: software dependencies and PyGTK; proper pip and conda packaging soon

ease of use: note academic community and ESiWACE support

production support

- general event handling (incl. aggregate emails)

- robust inter-workflow triggering(*)

- robust checkpoint restart

- auto-migration! (NEW)

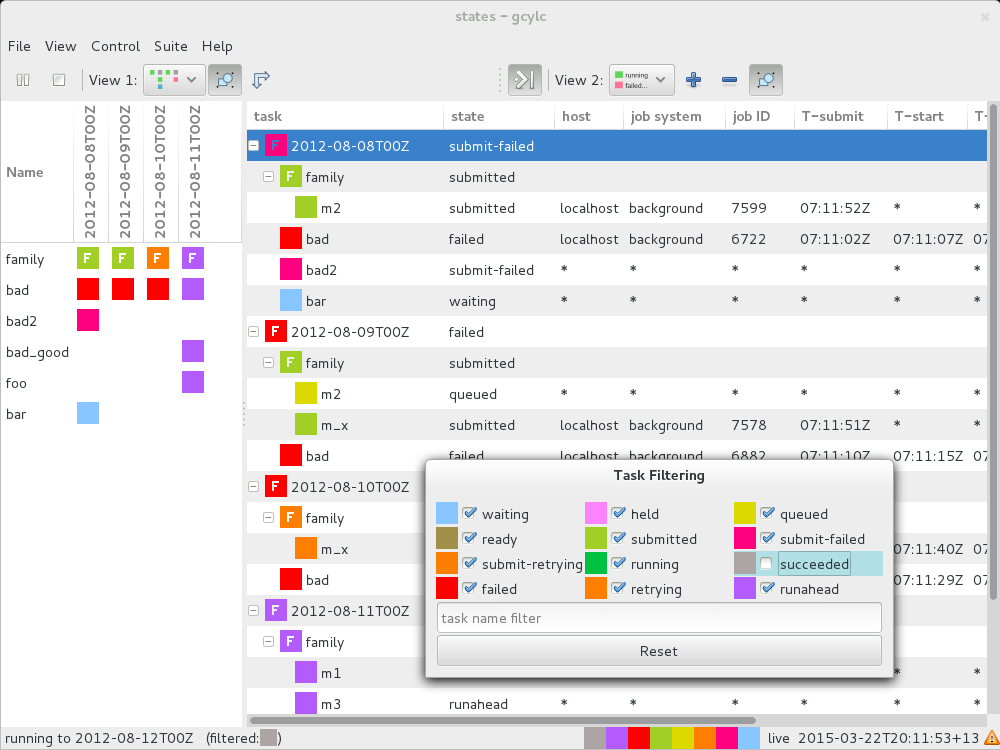

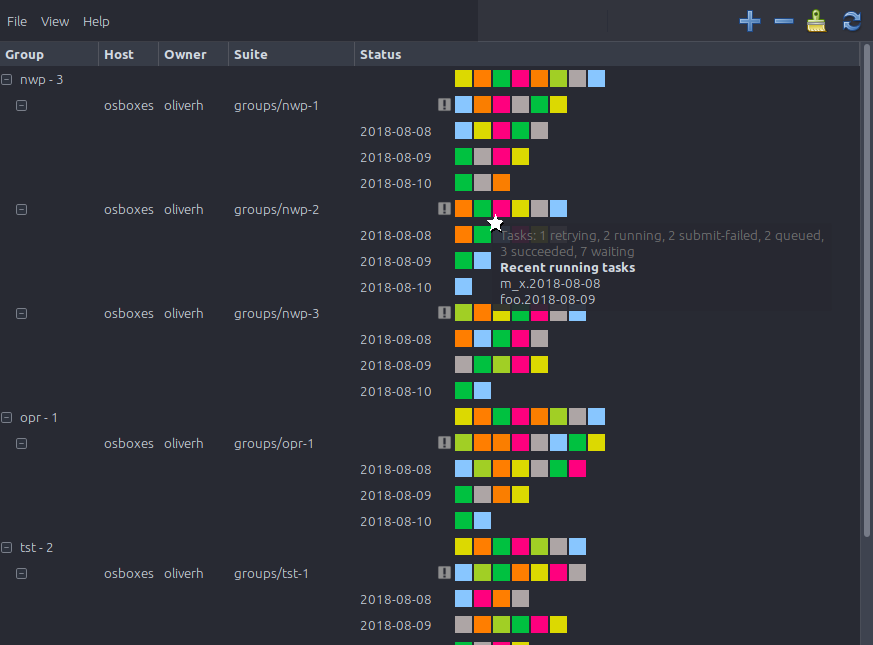

- multi-suite monitoring

- products of the Altair BOM project!

and finally ...

- active Open Source project

- commercial support and packaging from Altair!

Adoption Worldwide

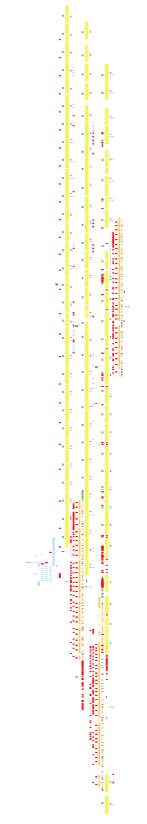

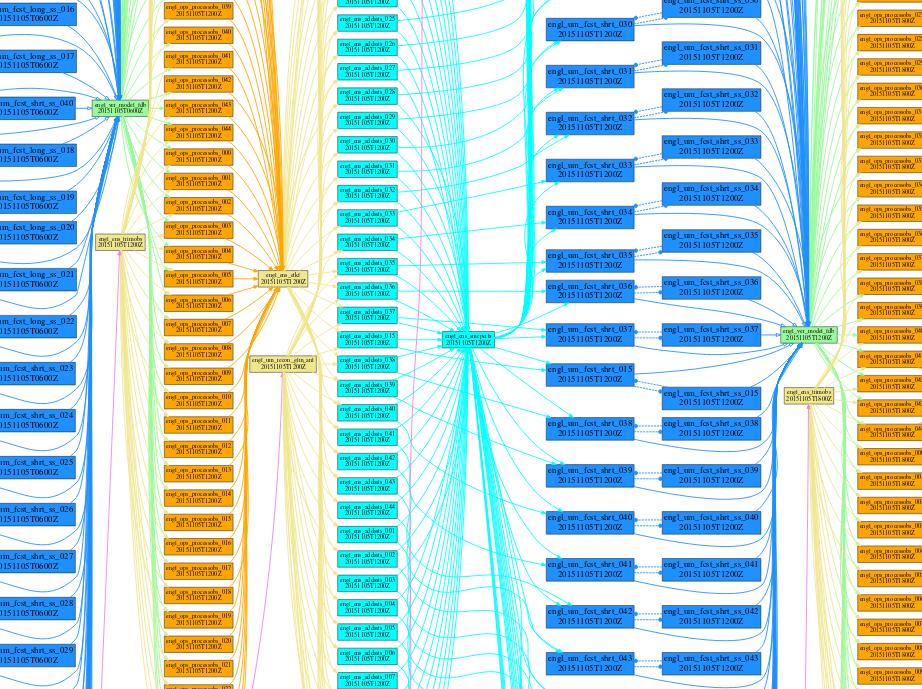

lights-out operation since 2011; 25 inter-dependent model suites (X 2)

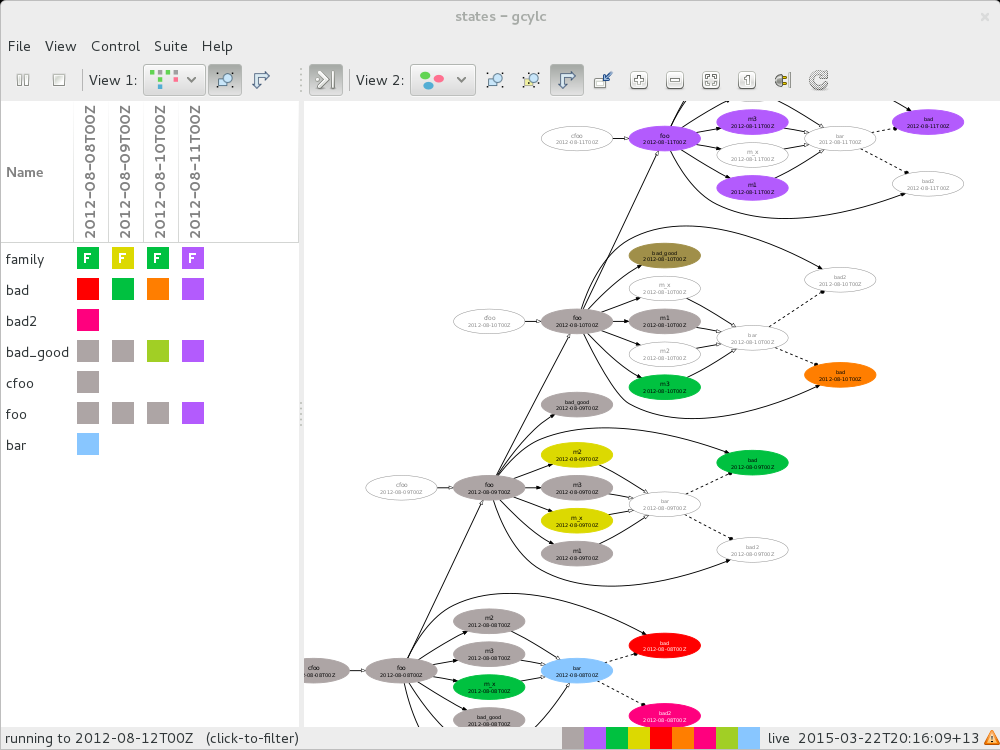

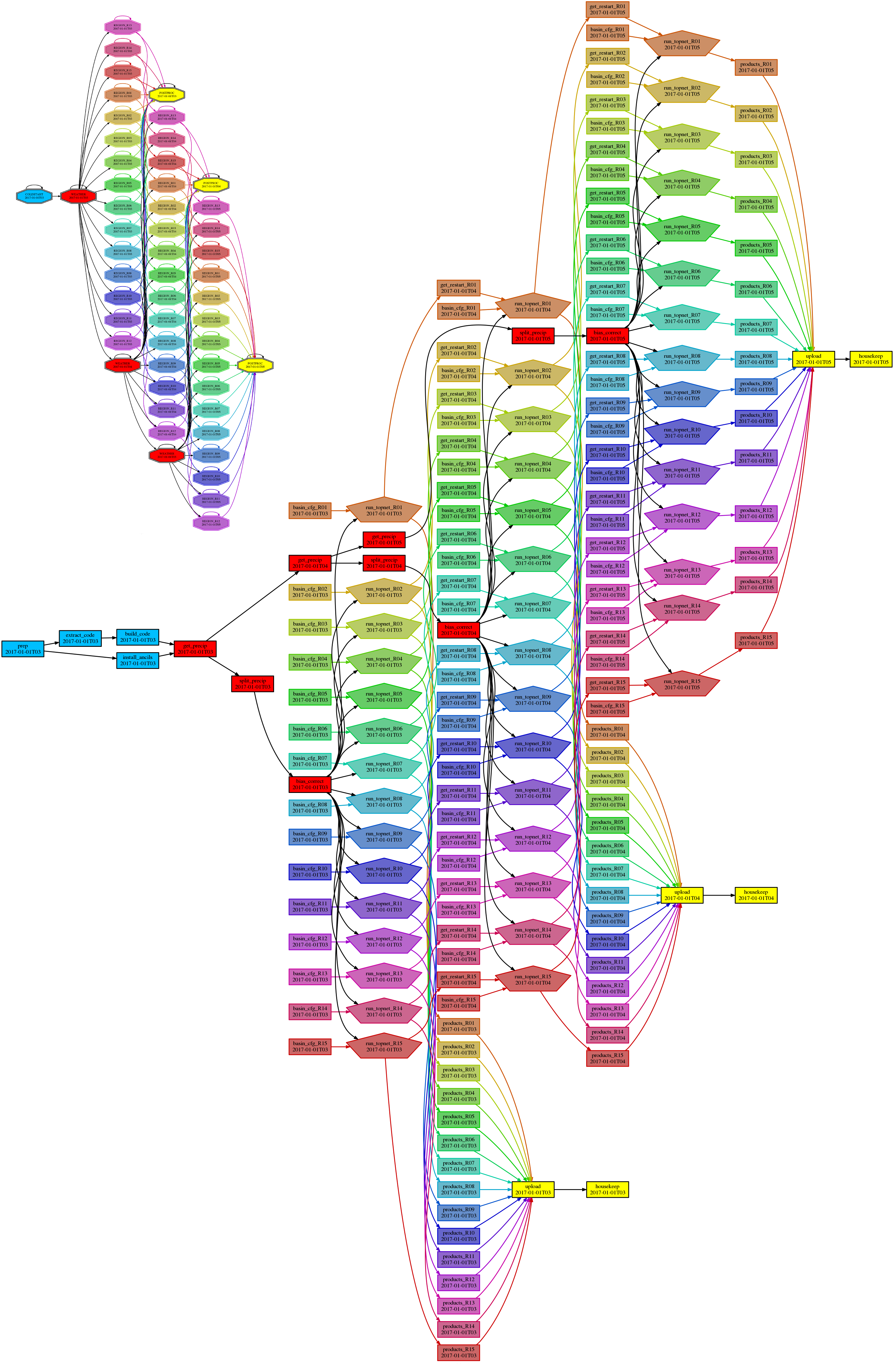

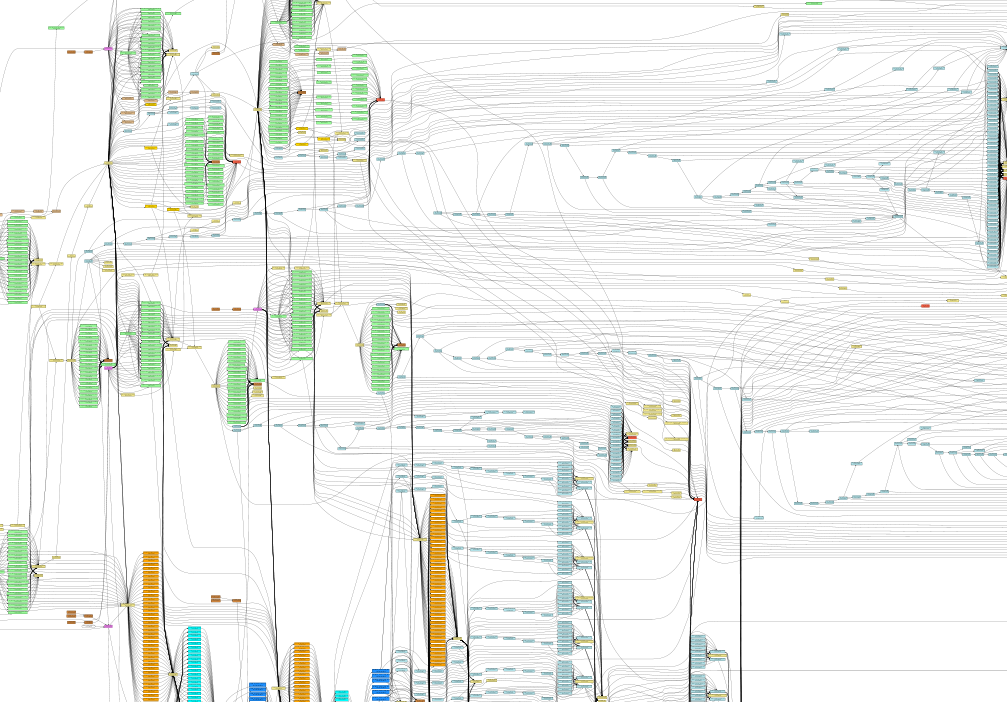

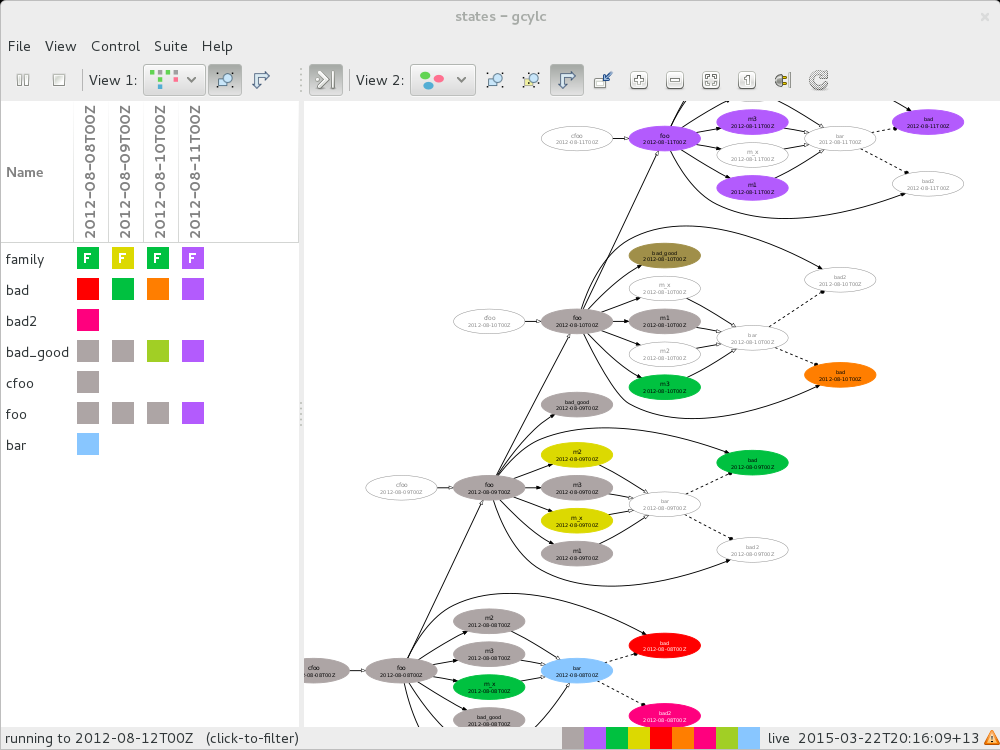

- 3 cycles of a small deterministic regional NWP suite. Obs processing tasks in yellow. Atmospheric model in red, plus DA and other pre- and post-processing tasks. A few tasks ... generates thousands of products from a few large model output files.

- ... ~45 tasks (3 cycles)

- ... as a 10-member ensemble, ~450 tasks (3 cycles)

- ... as a 30-member ensemble, ~1300 tasks (3 cycles)

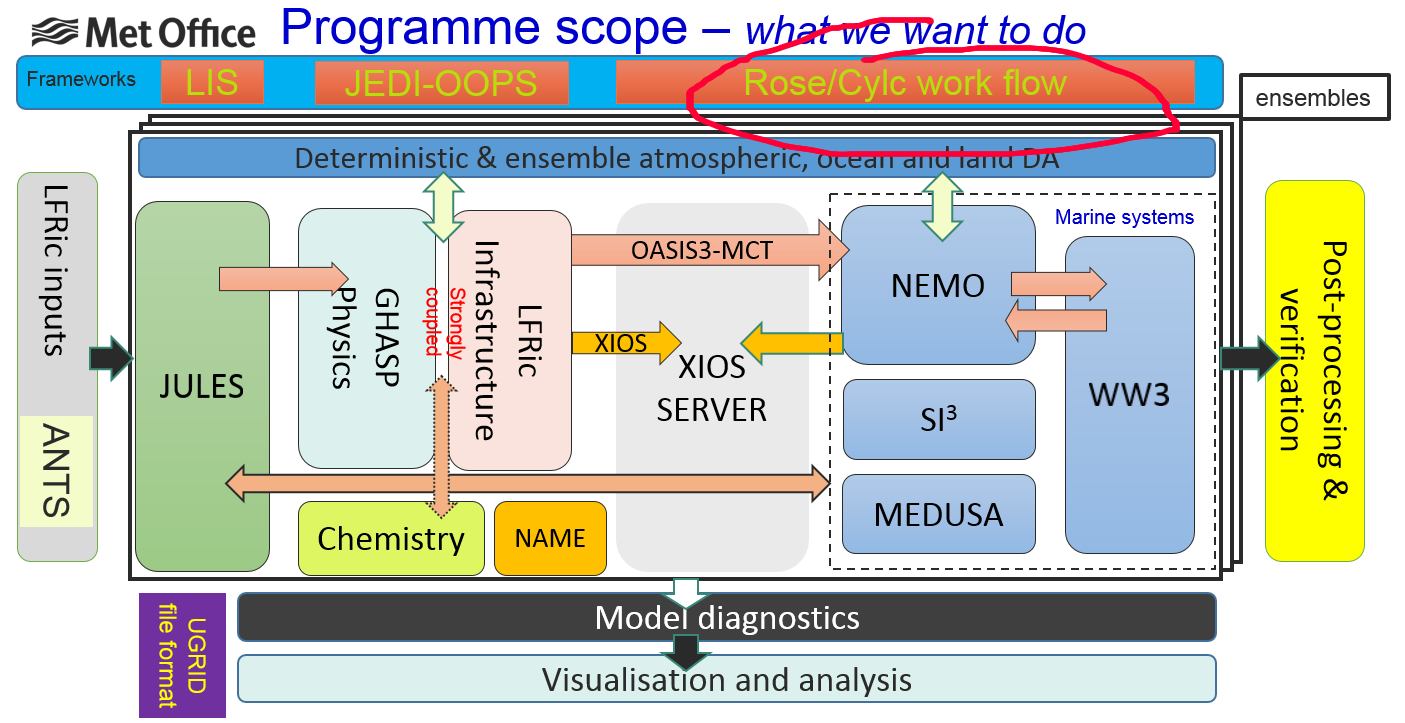

Roadmap

UK Met Office Exascale Program

This is a technical necessity, to survive into the exascale era!

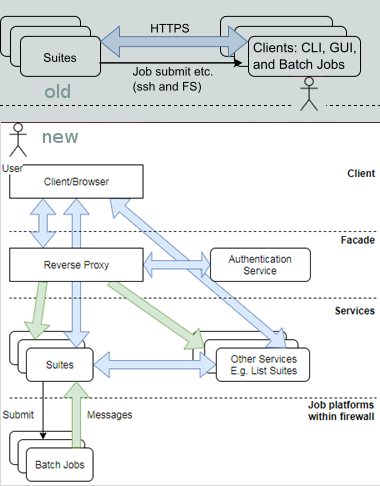

Python 3, Web GUI

- top priority

- Python 2 and PyGTK near end-of-life

- web GUI work starting

- need new architecture!

Meeting Exascale Challenges

An outline of some potential pathways for future development

Slides Credit: Oliver Sanders


Met Office (UK)

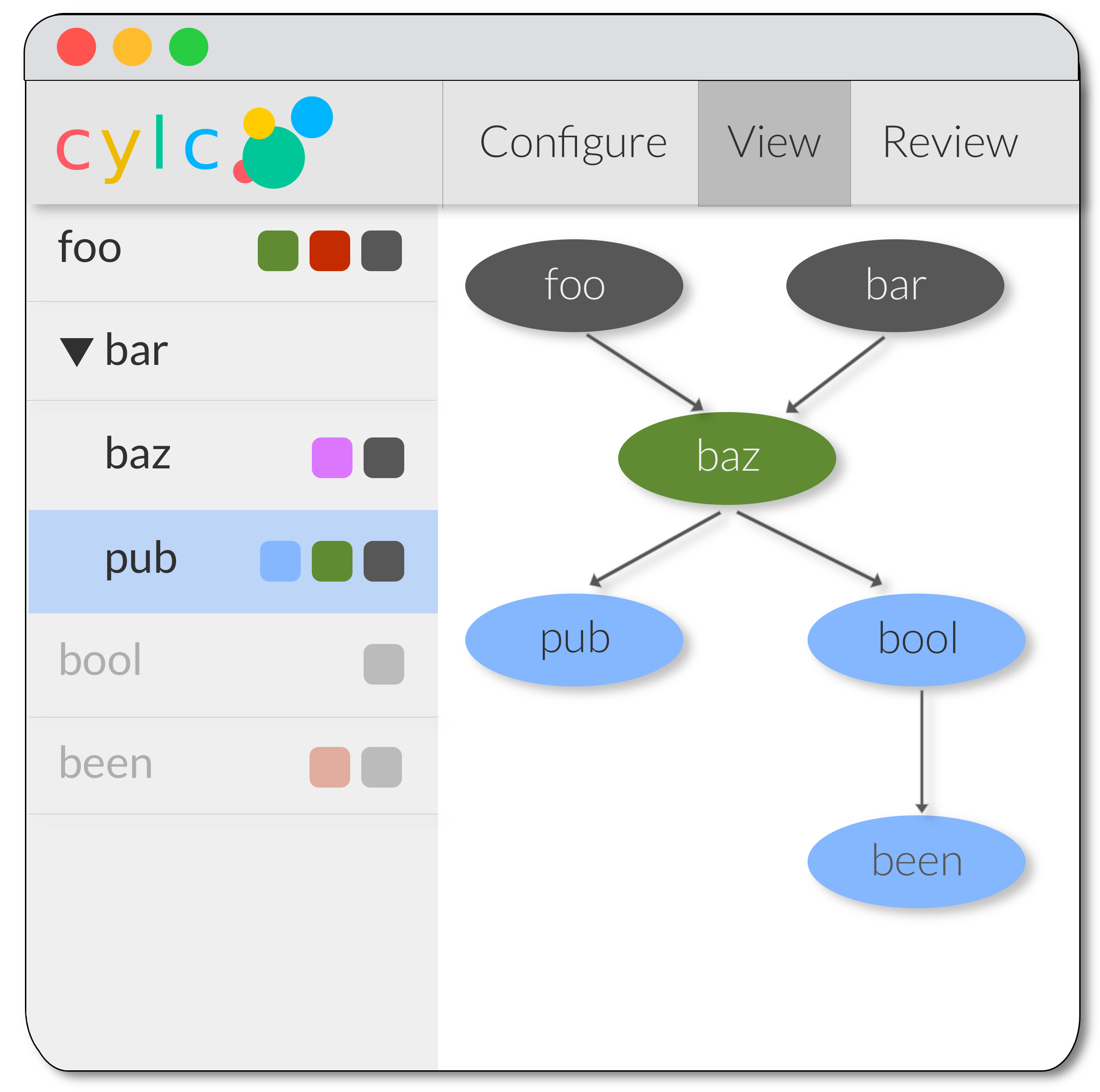

New Cylc GUI

Combine gscan & gcylc

View N Edges To Selected Node

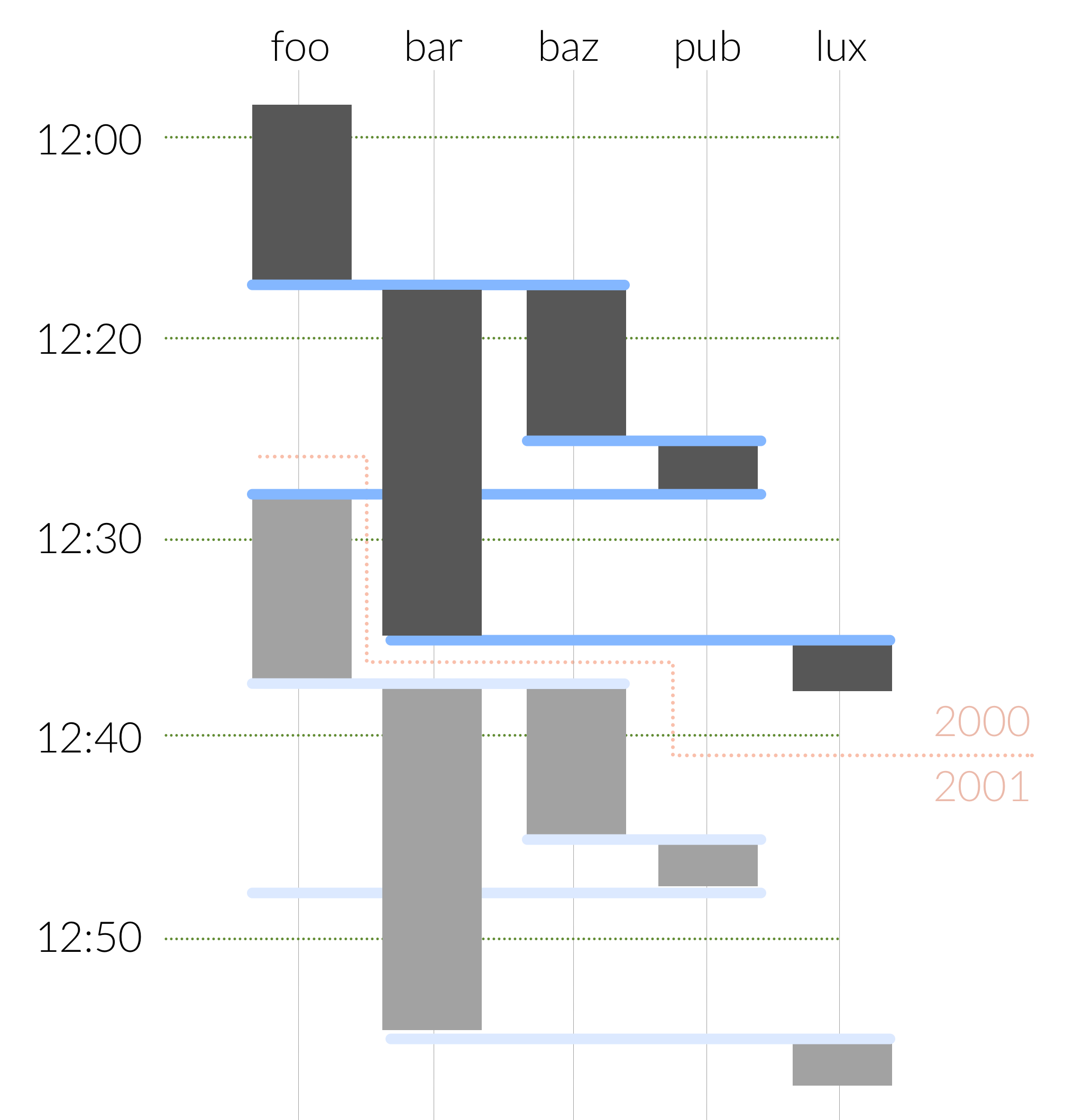
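
Showing only the nodes within N edges of a selected node amounts to a breadth-first search with a depth limit. A minimal sketch, assuming edges are followed in both directions (upstream and downstream) and using a hypothetical small graph; `within_n_edges` is an illustrative name, not part of any Cylc GUI code.

```python
from collections import deque


def within_n_edges(edges, selected, n):
    """Return the nodes at most n edges away from `selected` (either direction)."""
    neighbours = {}
    for a, b in edges:
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)
    distance = {selected: 0}
    queue = deque([selected])
    while queue:
        node = queue.popleft()
        if distance[node] == n:
            continue  # depth limit reached: do not expand further
        for nb in neighbours.get(node, ()):
            if nb not in distance:
                distance[nb] = distance[node] + 1
                queue.append(nb)
    return set(distance)


EDGES = [("prep", "foo"), ("foo", "bar"), ("foo", "baz"),
         ("bar", "qux"), ("baz", "qux"), ("qux", "boo")]
print(sorted(within_n_edges(EDGES, "bar", 1)))
```

For a large workflow this keeps the displayed sub-graph small no matter how many tasks the whole graph contains.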

Alternative Views

- Gantt chart

- Adjacency matrix

- Other?

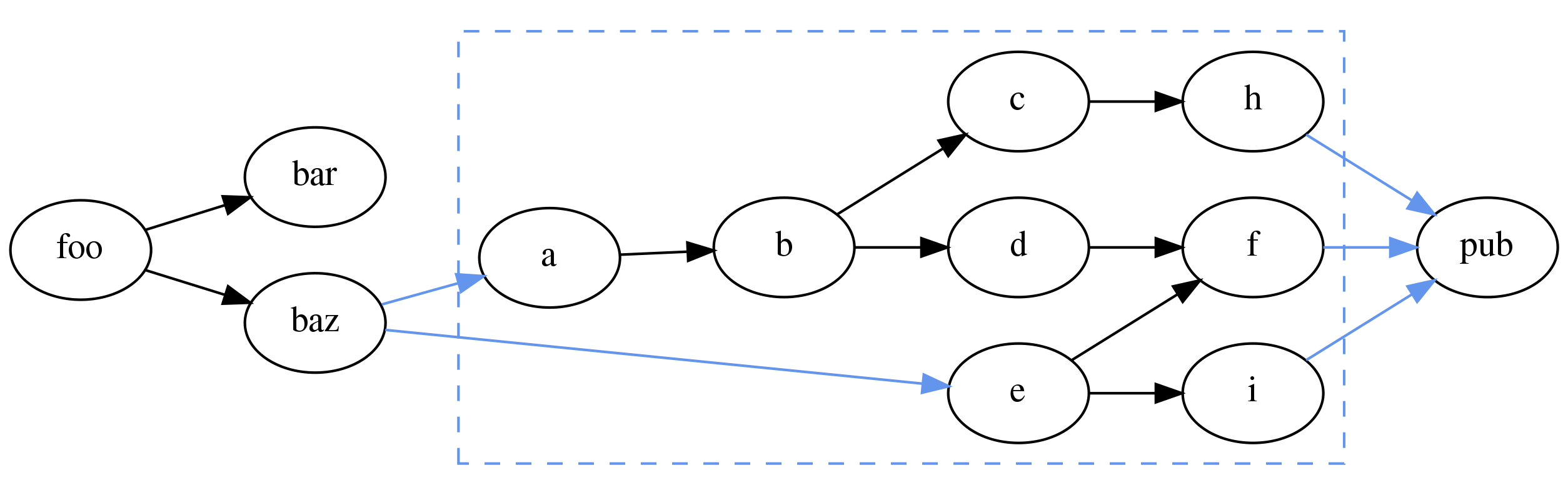

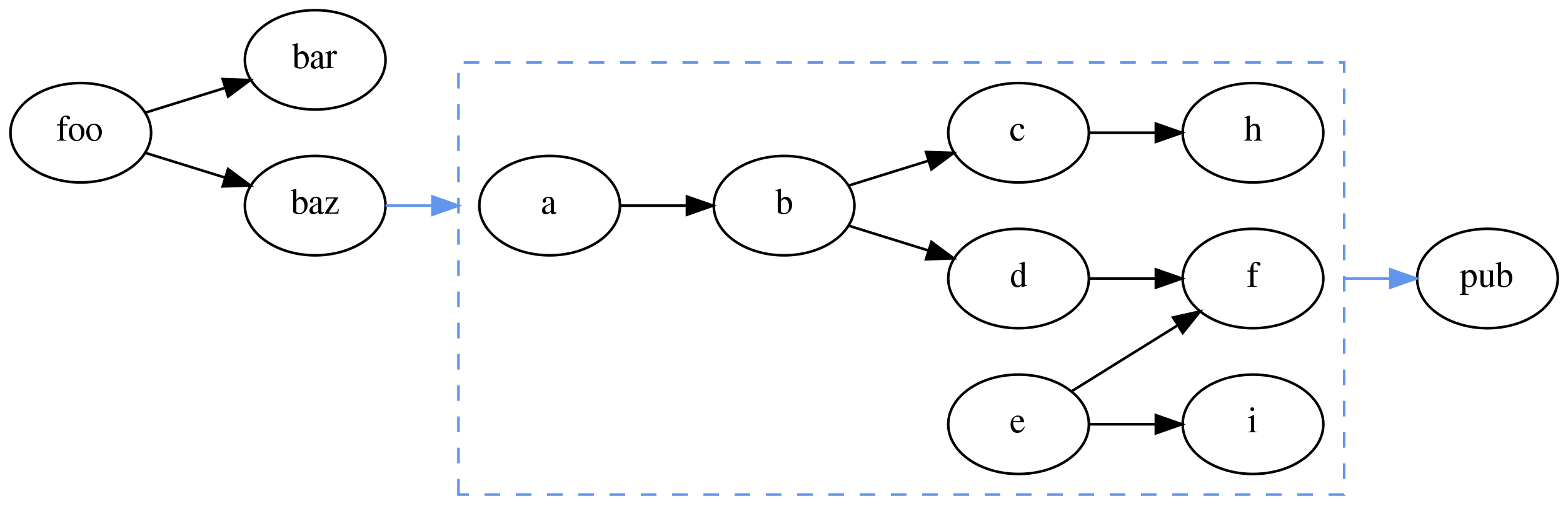

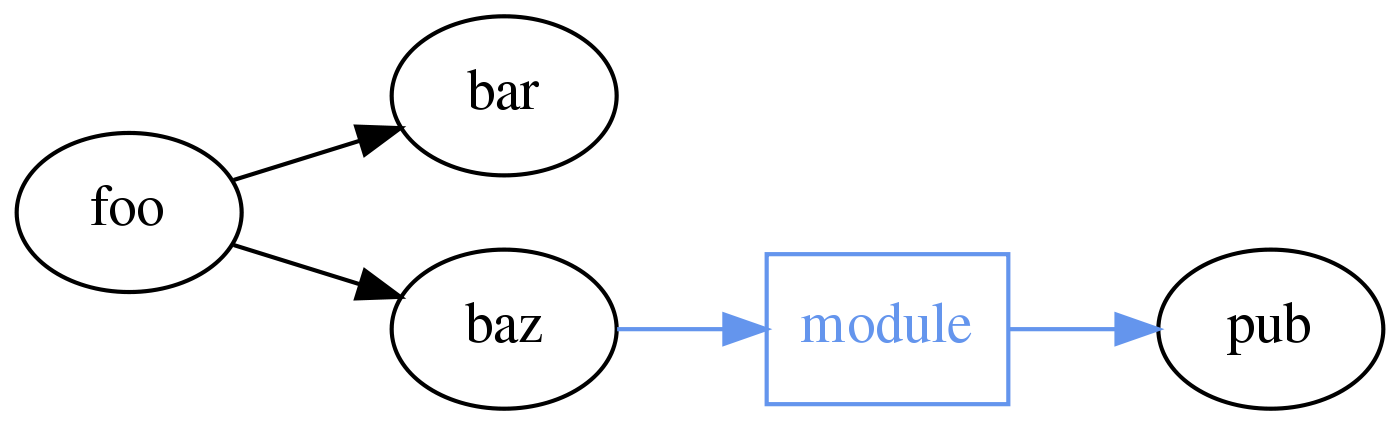

The Modularity Problem

- We can split the suite.rc into multiple files but...

- Suites are monolithic

- Developing components in isolation is difficult

It's hard to incorporate a module into a workflow

Ideally we would write dependencies to/from the module itself rather than the tasks within it

Workflows could be represented as tasks

foo => baz => module<p> => pub
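
Writing dependencies to and from a module, rather than its internal tasks, could work by substituting the module's own graph when the parent graph is expanded: edges into the module attach to its entry tasks, and edges out of it leave from its exit tasks. A hypothetical sketch: the module's internal tasks (`fetch`, `process`, `archive`, `plot`) and `expand_module` are invented for illustration, and the `<p>` parameterization is omitted.

```python
def expand_module(graph, module, sub_edges):
    """Replace the node `module` in `graph` with the workflow in `sub_edges`.

    Edges into the module go to its entry tasks (no internal upstream);
    edges out of it leave from its exit tasks (no internal downstream).
    """
    def qualify(task):
        return f"{module}.{task}"

    sub_tasks = {task for edge in sub_edges for task in edge}
    entries = sorted(sub_tasks - {down for _, down in sub_edges})
    exits = sorted(sub_tasks - {up for up, _ in sub_edges})

    edges = [(qualify(up), qualify(down)) for up, down in sub_edges]
    for up, down in graph:
        ups = [qualify(t) for t in exits] if up == module else [up]
        downs = [qualify(t) for t in entries] if down == module else [down]
        edges.extend((u, d) for u in ups for d in downs)
    return edges


# foo => baz => module => pub, with a hypothetical module workflow inside.
GRAPH = [("foo", "baz"), ("baz", "module"), ("module", "pub")]
MODULE = [("fetch", "process"), ("process", "archive"), ("process", "plot")]

for up, down in expand_module(GRAPH, "module", MODULE):
    print(f"{up} => {down}")
```

The parent graph never names `fetch` or `plot`, so the module can be developed and changed in isolation.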

Python API

Python > Jinja2
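
Part of the case for Python over Jinja2 is that ordinary language features can express a workflow directly. For example, operator overloading could turn `foo >> bar` into a graph edge. A hypothetical sketch: this `Task` class is invented for illustration and is not a Cylc API.

```python
class Task:
    """Hypothetical task node: `a >> b` records the dependency a => b."""

    def __init__(self, name, graph):
        self.name = name
        self.graph = graph  # shared list of (upstream, downstream) edges

    def __rshift__(self, other):
        self.graph.append((self.name, other.name))
        # Returning the right-hand task lets `a >> b >> c` chain, because
        # Python evaluates it as (a >> b) >> c.
        return other


graph = []
foo, bar, baz = (Task(name, graph) for name in ("foo", "bar", "baz"))
foo >> bar >> baz
print(graph)
```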

Illustrative examples of what Python could provide Cylc:

    bar = cylc.Task('myscript')
    cylc.run(
        foo >> bar >> baz
    )

Use Python data structures as Cylc parameters:

    animal = cylc.Parameter({
        'cat': {'lives': 9, 'memory': 2},
        'dog': {'lives': 1, 'memory': 10}
    })
    baz = cylc.TaskArray(
        'run-baz',
        args=('--animal', animal),
        env={'N_LIVES': animal['lives']},
        directives={'--mem': animal['memory']}
    )

Use Python to write Cylc modules:

    import my_component
    graph = cylc.graph(
        foo >> bar >> my_component >> baz,
        my_component.pub >> qux
    )

Alternative Scheduling Paradigms

    foo => bar => baz

    foo:
        out: a
    bar:
        in: a
        out: b
    baz:
        in: a, b
        out: c
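
In the in/out paradigm, the graph is not written at all: it is inferred by matching each consumed item to the task that produces it. A minimal sketch, assuming each data item has exactly one producer; `derive_edges` is an illustrative name.

```python
# The in/out declarations from the example above, as Python data.
TASKS = {
    "foo": {"in": [], "out": ["a"]},
    "bar": {"in": ["a"], "out": ["b"]},
    "baz": {"in": ["a", "b"], "out": ["c"]},
}


def derive_edges(tasks):
    """Infer task dependencies from which task produces each consumed item."""
    producer = {}
    for name, io in tasks.items():
        for item in io["out"]:
            producer[item] = name  # assumes each item has one producer
    return sorted(
        {(producer[item], name) for name, io in tasks.items() for item in io["in"]}
    )


print(derive_edges(TASKS))
```

The inferred `foo => baz` edge is implied transitively by `foo => bar => baz`, which is why the graph form does not need to state it.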

- Compute resource dependency?

- Others?

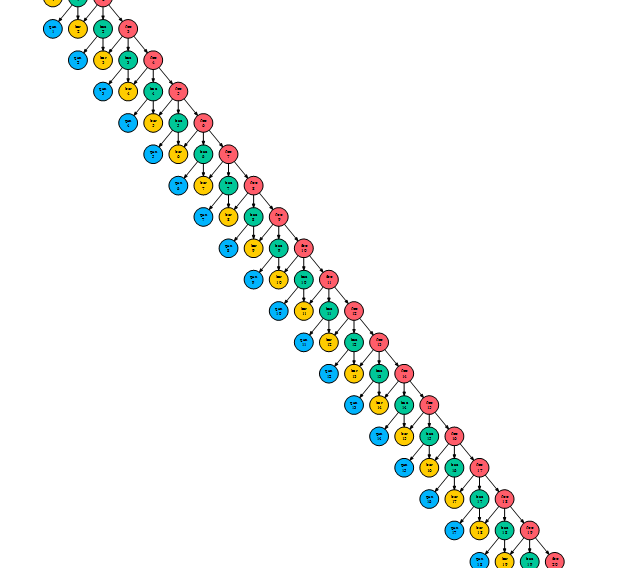

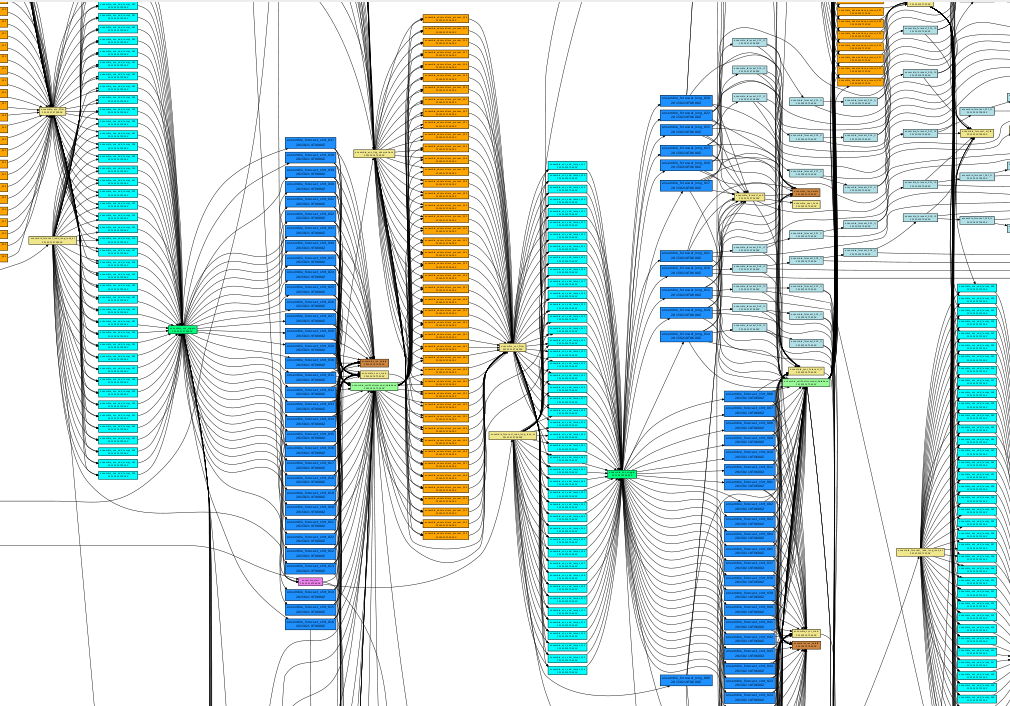

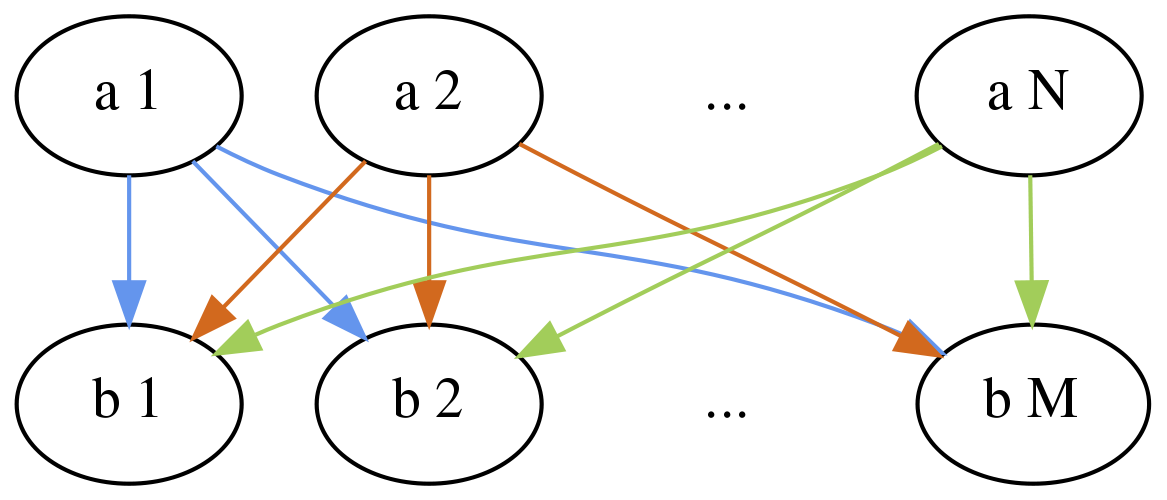

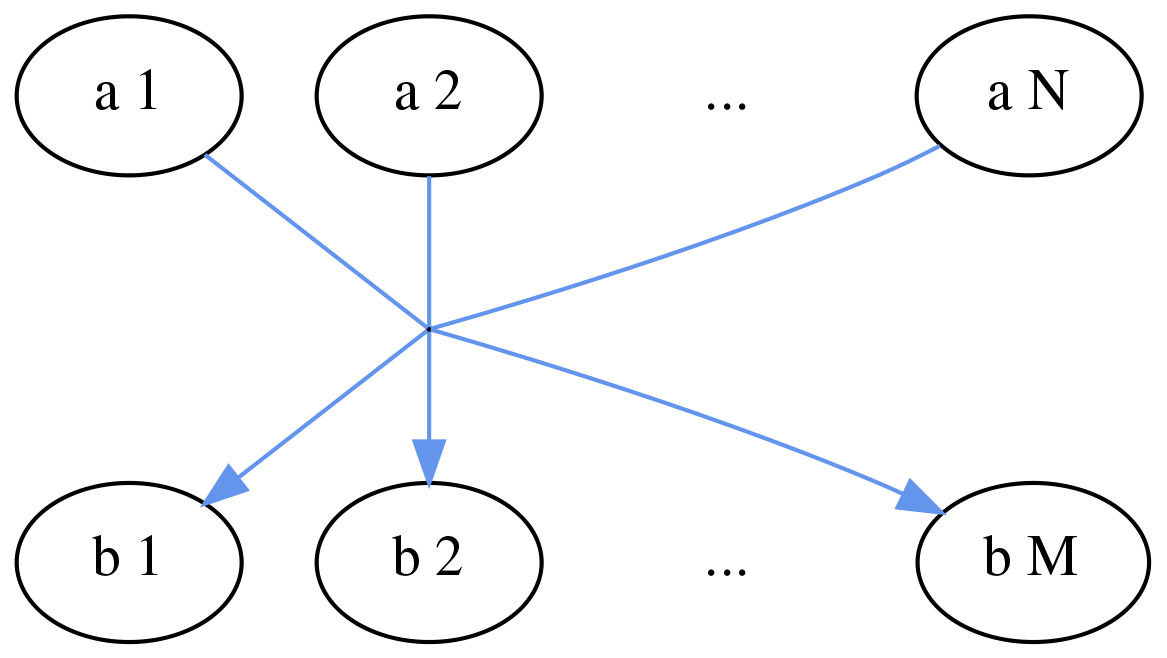

Scaling With Dependencies

Cylc can currently scale to tens of thousands of tasks and dependencies

But there are limitations, for example:

Many-to-many triggers result in N×M dependencies

Cylc should be able to represent this as a single dependency

The scheduling algorithm currently iterates over a "pool" of tasks.

We plan to re-write the scheduler using an event driven approach.

This should make Cylc more efficient and flexible, and solve problems like this one.
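
Both ideas, reacting to task events instead of iterating over a pool and storing a many-to-many trigger once, can be sketched together. A hypothetical illustration, not the planned Cylc design: group names, `run`, FIFO completion order, and the restriction that each group has at most one upstream group are all assumptions.

```python
from collections import deque


def run(groups, triggers, start):
    """Event-driven scheduling sketch with group-level (many-to-many) triggers.

    groups:   {group: [task, ...]}
    triggers: {upstream group: [downstream group, ...]}, each stored once
              rather than as N x M task-to-task edges.
    Returns tasks in the order they complete.
    """
    remaining = {group: len(tasks) for group, tasks in groups.items()}
    member_of = {task: group for group, tasks in groups.items() for task in tasks}
    events = deque(groups[start])  # ready tasks; popping one simulates its completion
    completed = []
    while events:
        task = events.popleft()
        completed.append(task)
        group = member_of[task]
        remaining[group] -= 1
        # React to the event: only the affected group's counter is touched,
        # instead of re-checking every task in a pool.
        if remaining[group] == 0:
            for downstream in triggers.get(group, []):
                events.extend(groups[downstream])
    return completed


GROUPS = {"GET": ["get1", "get2", "get3"], "PROC": ["proc1", "proc2"], "ARCHIVE": ["archive"]}
TRIGGERS = {"GET": ["PROC"], "PROC": ["ARCHIVE"]}
print(run(GROUPS, TRIGGERS, "GET"))
```

The `GET => PROC` trigger is one entry here, where task-level edges would need 3 × 2 = 6.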

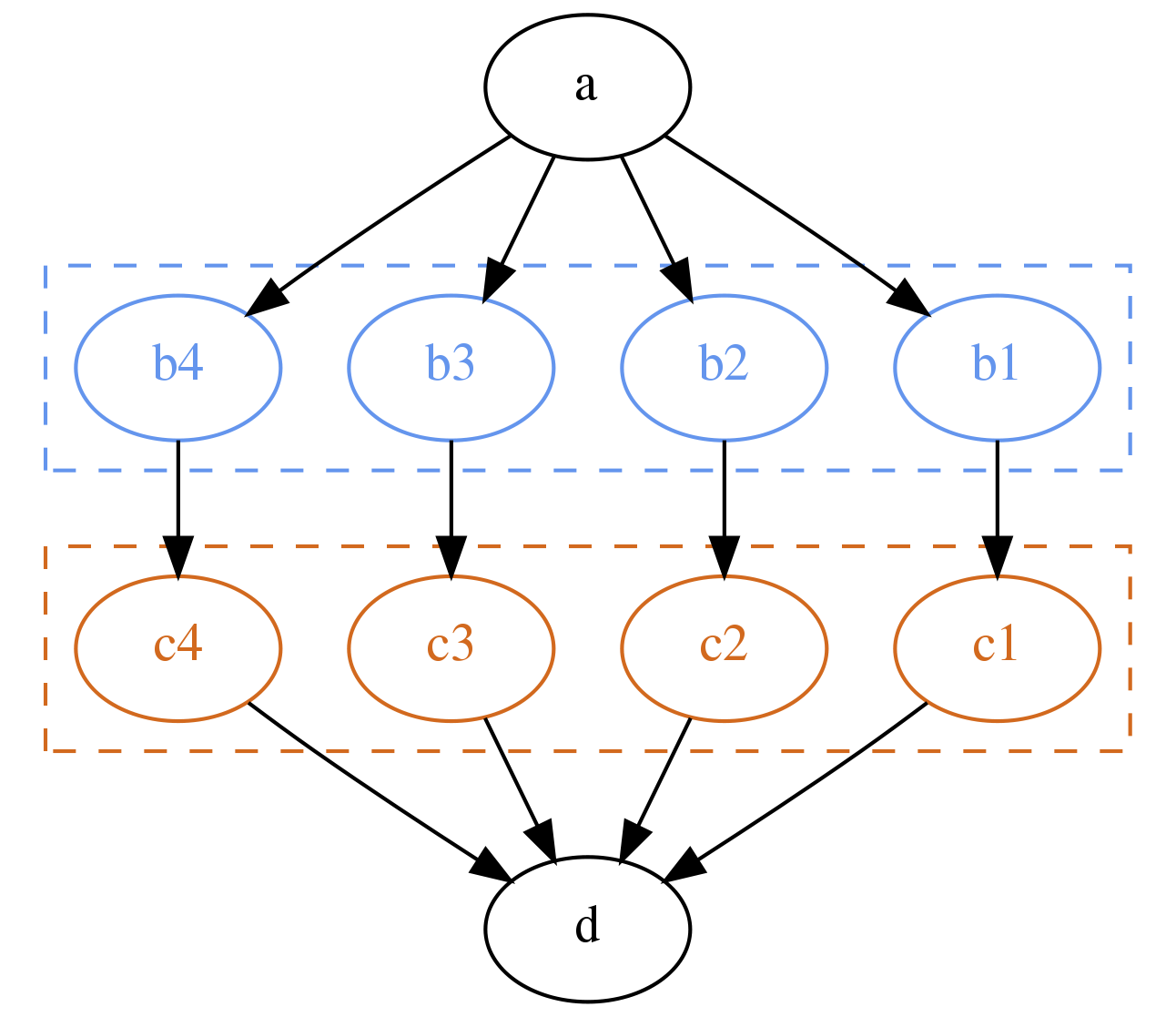

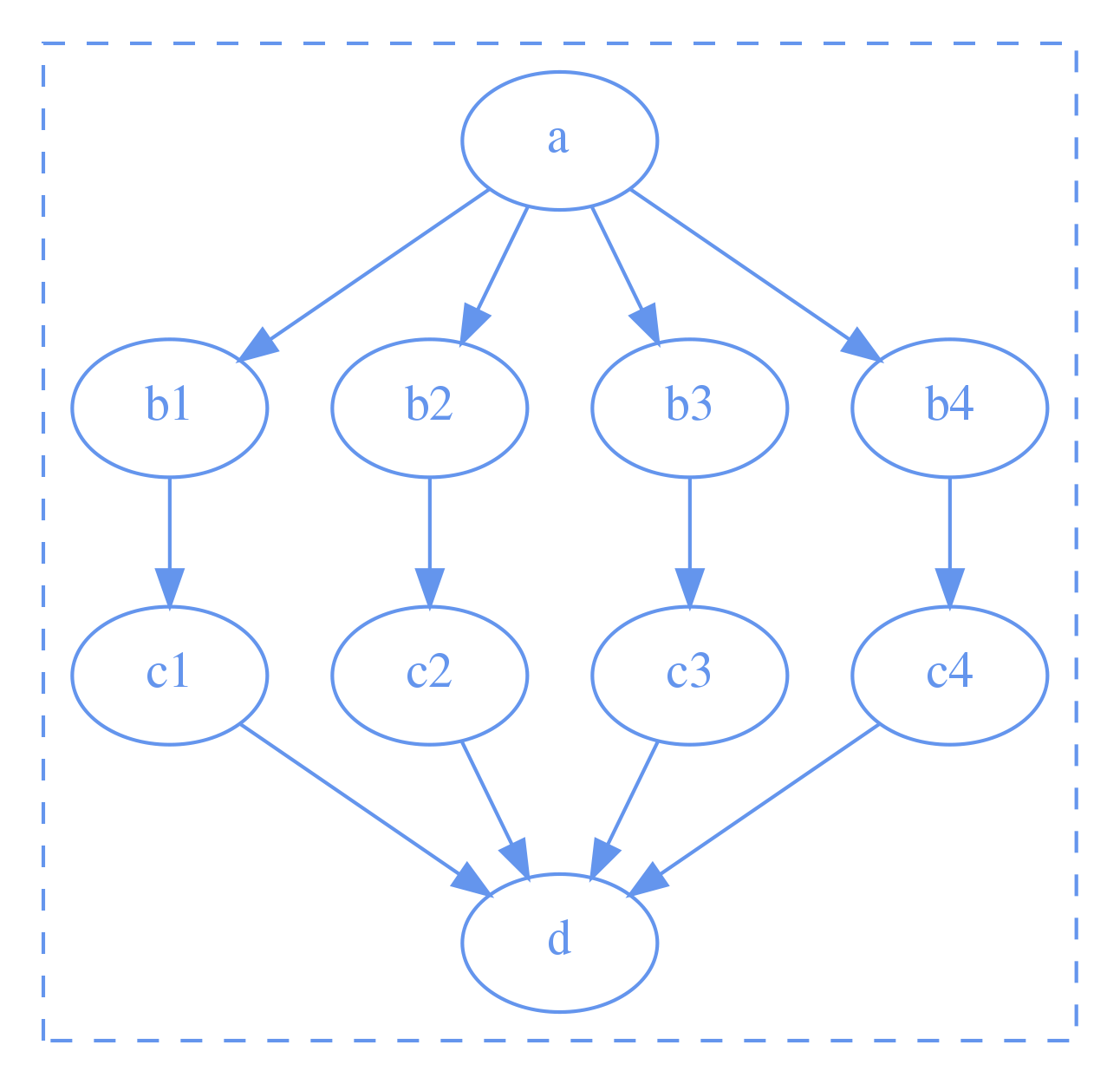

Kernel - Shell Architecture

Working towards a leaner Cylc, we plan to separate the codebase into a Kernel - Shell model

| Shell | Kernel |
| --- | --- |
| User Commands | Scheduler |
| Suite Configuration | Job Submission |

Batching Jobs

Combining multiple jobs to run in a single job submission.

Arbitrary Batching

A lightweight Cylc kernel could be used to execute a workflow within a job submission.

- The same Cylc scheduling algorithm

- No need for job submission

- Different approach to log / output files
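
Running a small workflow inside one job can be sketched as a topological sort executed in a single process, with one combined log instead of per-job log files. A hypothetical sketch: Python callables stand in for task scripts, and `run_batch`, `GRAPH`, and `ACTIONS` are illustrative names. It uses the standard-library `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter  # Python 3.9+


def run_batch(graph, actions):
    """Run a small workflow in dependency order inside a single job.

    graph:   {task: {upstream tasks}}
    actions: {task: callable returning a short result string}
    Output from every task goes into one combined log.
    """
    log = []
    for task in TopologicalSorter(graph).static_order():
        log.append(f"{task}: {actions[task]()}")
    return log


GRAPH = {"bar": {"foo"}, "baz": {"foo", "bar"}}  # foo => bar => baz, foo => baz
ACTIONS = {name: (lambda name=name: f"{name} done") for name in ("foo", "bar", "baz")}

for line in run_batch(GRAPH, ACTIONS):
    print(line)
```

No job is submitted per task; the scheduling order still comes from the graph, which is the point of reusing the same algorithm in a lightweight kernel.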

Future Challenges

- Container technology

- Computing in the cloud

- File system usage